A question that regularly comes up in ETL scenarios is whether SQL Server can read and write faster if you divide your tables and indexes over multiple filegroups and your filegroups over multiple files. My standard thinking on this was always as follows: Depending on whether you can write those files to different disks or not this will be the case. In a single disk scenario it should not matter at all. I started out setting up an experiment to compare the performance of different file and filegroup configurations.

While working on this and running into some interesting problems, I realized that the better question to ask is: “How fast can I practically write to disk, and why am I not reaching those speeds with SSIS and SQL Server?” This post discusses the issues I ran into while getting a simple ETL process to perform at a reasonable speed but also to run at the same speed every time I ran it with the same exact parameters. In a follow up post I will turn back to the initial question of whether it makes sense to split up your database into multiple files.

Test scenario

I setup a very simple data warehouse scenario that runs two steps:

- A big file is extracted into a staging table

- The staging table is loaded into a datastore table using an insert statement

Theoretical speed

How fast can this be written to disk? I looked up the benchmark of my disk on harddrivebenchmark.net: It should be able to write about 4000 MBs per second, implying we should handle this ETL process in a few seconds. In practice I am also running Windows on the same disk, it has bitlocker enabled, virus scanning etc. So let’s see if we can get a more practical speed benchmark.

Generation and subsequent reading of test file

The experiment needs a test file to load. I wrote a script to generate test data and I measured the writing speed:

- Write speed 2 GB file

- Duration: 96 seconds

- Speed: 21MB per second

- Write speed 4 GB file

- Duration: 195 seconds

- Speed: 21 MB per second

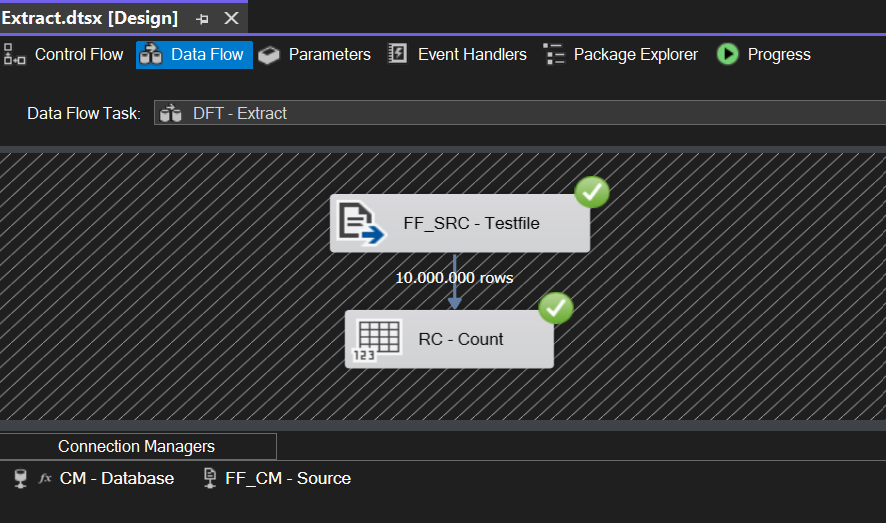

- Read speed of the complete file with SSIS was tested by connecting a flat file source to a counter.

- 2GB: 10 seconds

- 4GB: 18 seconds

It looks like the the reading is not a bottleneck. In the measurements it will be assumed that writing to disk starts immediately after the first rows are read from the file. We are writing the same amount of data into a table three times. We should be able to do this in 96 * 3 = 288 seconds for the 2GB file in a single thread.

Experimental variables

After ensuring the basic setup of the package would not influence the performance randomly I could set up the experimental variables. I used the following :

- Tables in same file or different filegroup

- If tables in different filegroup would it matter to split the filegroups up in multiple files

- Use of balanced data distributor to get parallel insert in the extraction phase

- Number of destinations for balanced data distributor

- Dataflow buffersize

This led to 28 * 9 = 252 scenarios. This is is a bit of a large amount but luckily the whole test is automated. You can find all scenarios in my git repository: https://github.com/pieterdelange/SQLRWPerformance.

Practical speed

After running the experiment on my laptop I found that I was able extract and load a 2GB file in about 50 seconds and a 4GB file in about a 100 seconds. Maximum SQL Server processing speed for the whole ETL process in this setup can thus be expressed as roughly 2 times the single thread write speed of my laptop. In this time the file is written to disk three times:

- From file to staging

- Index on staging table

- Datastore table

Calculating this back to write speed: (4 GB * 1024 * 3) / 100 = 122 MB/s on average for the three stages of the ETL.

Keeping all else equal

The biggest issue I had was to get rid of the variance in the SQL Load process from the staging table to the datastore table. The performance variance was big enough that, depending on chance, a clearly better setup could accidentally perform worse than a bad setup. I investigated this first using queryplan. This showed me that sometimes there was a high waiting time. I used Capturing wait stats for a single operation – Paul S. Randal (sqlskills.com) to look into this further. The logging files reached into the 200MB sometimes and showed a lot of waits. My initial conclusion was that I needed to set MaxDop to 8 but this did not help. Reading Knee-Jerk Wait Statistics : CXPACKET – SQLPerformance.com gave me a better understanding of parallelism.

Without index there are no statistics for the engine to understand distribution of rows in the table. This may make it hard for the engine to understand how it must distribute the work across multiple threads for parallel insert. Which could explain this variance.

To prevent this issue I put an index on the staging table. This indeed removed the variance from the performance but it still did not give the expected throughput. The queryplan for the load process showed that parallelism was not used yet. An interesting read here: Real World Parallel INSERT…SELECT: What else you need to know! – Microsoft Community Hub. I realized that the index I had on the datastore table was preventing the engine from being able to use parallelism. I removed that index and now it looks like the query and tables are setup correctly for comparison.

Initial conclusions (or open doors)

- For parallelism to work the table you are inserting into must not contain a row store index

- The engine must know something about the source data your are loading so it can distribute work in the most optimal way.

- This means statistics, which means index

- Balanced data distributors increase the speed of file extraction process in SSIS

Experimental setup

- Database

- SQL Server 2019

- Simple recovery

- Staging table has it’s index dropped prior to extraction process

- Before loading from staging into datastore an index is put onto staging table

- Datastore table has no index to enable parallel insert

- Computer

- 16 GB memory

- 14 Cores

Limitations

For practical purposes this experiment has many limitations which can be improved upon. I will list a few that came to mind below:

- Testfile

- Sequential values in the rows instead of random values

- No columns with repeated values like a boolean Yes\No

- Not a lot of different data types used

- Adding or removing columns will impact results

- Database

- Index type used could be improved or made a variable in the experiment

- The type of index and how much improvement it will give is also related to the data in the test file itself.

- Randomness

- The measures taken to eliminate randomness in the experiment may have led to overall sub optimization. If we allow for some randomness in our performance it may on average lead to faster speed.

- Measurements

- Speed calculation are all relative so you’d have to perform your own experiments to arrive at what would happen in your own environments

- Calculating back the speed to MB’s per second is not an indication of raw IO speed of the disk achieved during the processing. This in fact may be more or less depending on the efficiency of the setup. I’ll include some graphs from resource monitor in a next blog post.